How Neural Networks Work in Machine Learning

Neural networks are one of the most powerful tools in modern machine learning. At first glance, they might seem complicated, but once you understand the basic concepts, they start to make sense. Let’s break down how neural networks work in simple terms that anyone can understand.

What is a Neural Network?

A neural network is loosely inspired by the brain. Just as biological neurons connect and signal to one another, a neural network consists of artificial neurons (or nodes) organized in layers: each neuron receives numbers from the previous layer, combines them, and passes a result on to the next.
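A single artificial neuron can be sketched in a few lines of Python. This is a minimal illustration, assuming the common formulation in which a neuron computes a weighted sum of its inputs plus a bias and passes the result through an activation function (sigmoid here). The function name `neuron` and the example numbers are hypothetical.

```python
import numpy as np

def neuron(inputs, weights, bias):
    """Hypothetical single neuron: weighted sum plus bias, then a sigmoid activation."""
    z = np.dot(inputs, weights) + bias   # weighted sum of the incoming signals
    return 1.0 / (1.0 + np.exp(-z))      # squash the result into the range (0, 1)

# Three input features, one weight per incoming connection (illustrative values)
x = np.array([0.5, -1.0, 2.0])
w = np.array([0.4, 0.3, -0.2])
b = 0.1

# z = 0.2 - 0.3 - 0.4 + 0.1 = -0.4, so the output is about 0.401
print(neuron(x, w, b))
```

Training a network amounts to finding good values for `w` and `b` in every neuron at once.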

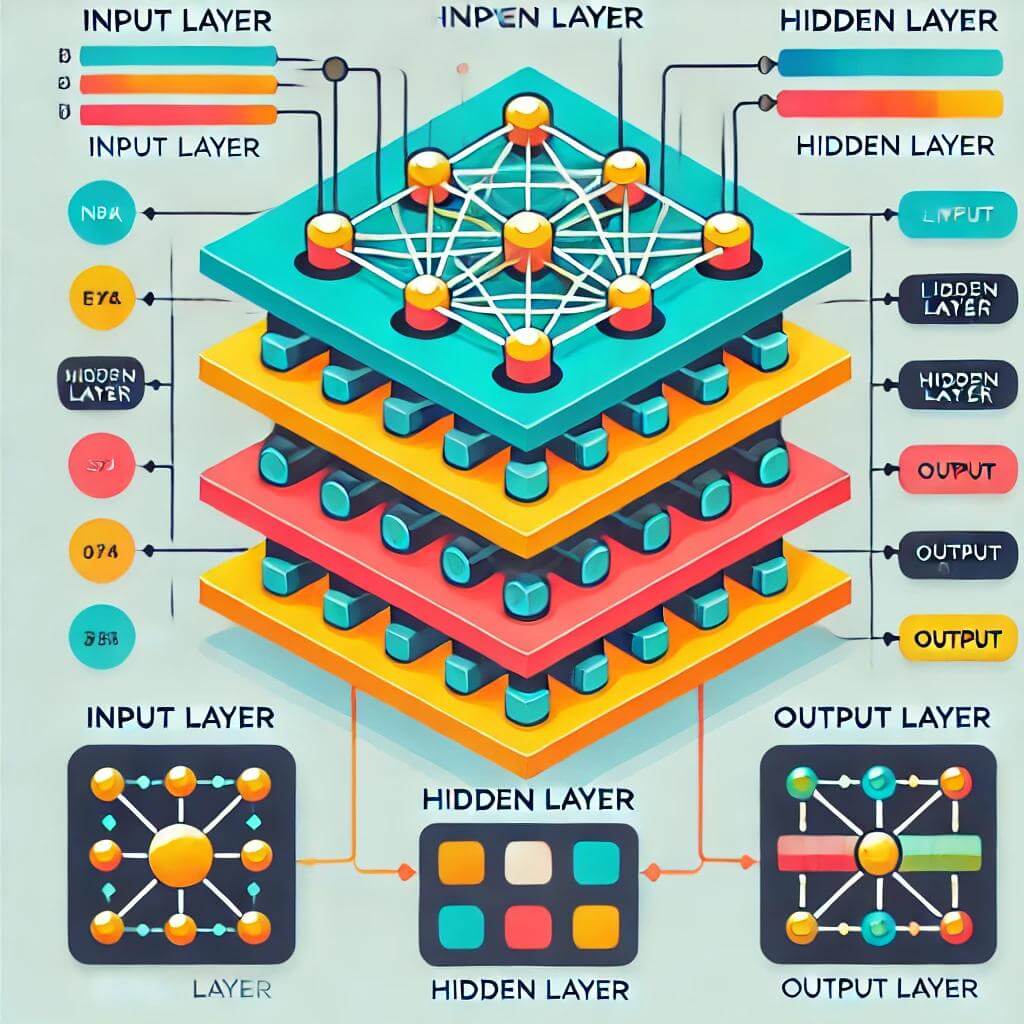

Structure of a Neural Network

A typical neural network is made up of three types of layers:

- Input Layer: The layer that receives the initial data. Each neuron here represents a feature from the dataset.

- Hidden Layers: These layers sit between the input and output layers and transform the data step by step. A network can have one hidden layer or many.

- Output Layer: The final layer that gives the predicted result.
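The layer structure maps directly onto arrays of weights: a fully connected layer with n inputs and m neurons needs an n x m weight matrix plus m biases. The sketch below assumes layer sizes of 8, 12, 8 and 1 (the same sizes as the Keras example later in this article) and simply builds the arrays and counts the trainable parameters; the random initialization is an illustrative assumption.

```python
import numpy as np

# Illustrative layer sizes: 8 input features, hidden layers of 12 and 8 neurons, 1 output
layer_sizes = [8, 12, 8, 1]

rng = np.random.default_rng(0)
params = []
for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
    W = rng.normal(scale=0.1, size=(n_in, n_out))  # one weight per connection
    b = np.zeros(n_out)                             # one bias per neuron
    params.append((W, b))

for i, (W, b) in enumerate(params, start=1):
    print(f"layer {i}: weights {W.shape}, biases {b.shape}")

# (8*12 + 12) + (12*8 + 8) + (8*1 + 1) = 108 + 104 + 9
total = sum(W.size + b.size for W, b in params)
print("total trainable parameters:", total)  # 221
```

Note that the input layer has no weights of its own; it only holds the feature values.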

How Does a Neural Network Learn?

Neural networks learn by adjusting the weights on the connections between neurons. Every connection has a weight, and changing these weights during training changes the network's predictions. After each prediction, the error is measured, and a procedure called backpropagation works out how much each weight contributed to that error; an optimization algorithm then nudges each weight in the direction that reduces the error.
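To make "adjusting weights based on error" concrete, here is a one-weight toy example. It assumes a linear model that predicts `w * x`, mean squared error as the measure of error, and plain gradient descent with a hypothetical learning rate of 0.05. The sign of the gradient tells us which direction to move the weight, and its size tells us how far.

```python
# Toy data generated by y = 2x, so the ideal weight is 2.0 (illustrative assumption)
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

w = 0.0               # start from an arbitrary weight
learning_rate = 0.05  # step size (hypothetical choice)

for step in range(50):
    # Gradient of the mean squared error (1/N) * sum((w*x - y)^2) with respect to w
    grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
    # Move the weight a small step against the gradient to reduce the error
    w -= learning_rate * grad

print(round(w, 4))
```

After 50 updates the weight ends up very close to 2.0. A real network repeats exactly this idea for thousands or millions of weights at once.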

Training Process in Detail

- Forward Propagation: Data flows through the network from the input layer to the output layer, making a prediction.

- Error Calculation: The network compares its prediction to the actual result, calculating the error.

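- Backpropagation: The error is propagated backward through the network, using the chain rule to compute how much each weight contributed to it (the gradient of the error with respect to each weight).

- Optimization: An optimization algorithm such as gradient descent (or a variant like Adam) uses those gradients to update the weights in the direction that reduces the error.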
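The four training steps can be written out by hand for a tiny network. This is a from-scratch NumPy sketch, not how Keras implements training internally; the XOR dataset, the 8 tanh hidden units, the sigmoid output, binary cross-entropy as the error measure and the learning rate of 0.5 are all illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(42)

# XOR toy dataset: 4 samples, 2 features, binary targets
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

# One hidden layer of 8 tanh units and one sigmoid output unit (assumed sizes)
W1 = rng.normal(scale=1.0, size=(2, 8))
b1 = np.zeros((1, 8))
W2 = rng.normal(scale=1.0, size=(8, 1))
b2 = np.zeros((1, 1))
lr = 0.5

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

losses = []
for epoch in range(5000):
    # 1. Forward propagation
    h = np.tanh(X @ W1 + b1)   # hidden activations, shape (4, 8)
    p = sigmoid(h @ W2 + b2)   # predicted probabilities, shape (4, 1)

    # 2. Error calculation: mean binary cross-entropy (eps avoids log(0))
    eps = 1e-12
    loss = -np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))
    losses.append(loss)

    # 3. Backpropagation: chain rule from the loss back to every weight
    dz2 = (p - y) / len(X)     # dLoss/d(output pre-activation) for sigmoid + cross-entropy
    dW2 = h.T @ dz2
    db2 = dz2.sum(axis=0, keepdims=True)
    dh = dz2 @ W2.T            # error signal sent back to the hidden layer
    dz1 = dh * (1 - h ** 2)    # derivative of tanh
    dW1 = X.T @ dz1
    db1 = dz1.sum(axis=0, keepdims=True)

    # 4. Optimization: plain gradient descent step
    W2 -= lr * dW2
    b2 -= lr * db2
    W1 -= lr * dW1
    b1 -= lr * db1

print(f"loss: first epoch {losses[0]:.4f}, last epoch {losses[-1]:.4f}")
print("predictions from the final forward pass:", p.ravel().round(2))
```

The loss should fall steadily as the loop runs. Libraries like Keras perform the same forward pass, loss computation, backpropagation and weight update, but compute the gradients automatically and offer optimizers such as Adam.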

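```python
# Example of a simple neural network using Python and Keras
import numpy as np
from keras.models import Sequential
from keras.layers import Dense, Input

# Placeholder training data (assumption): 100 samples with 8 features each
# and a binary label; replace X_train and y_train with your own dataset
X_train = np.random.rand(100, 8)
y_train = (X_train.sum(axis=1) > 4).astype(int)

# Create model: 8 inputs -> 12 ReLU units -> 8 ReLU units -> 1 sigmoid output
model = Sequential()
model.add(Input(shape=(8,)))
model.add(Dense(12, activation='relu'))
model.add(Dense(8, activation='relu'))
model.add(Dense(1, activation='sigmoid'))

# Compile model: binary cross-entropy loss for a 0/1 target, Adam optimizer
model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

# Train the model
model.fit(X_train, y_train, epochs=150, batch_size=10)
```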

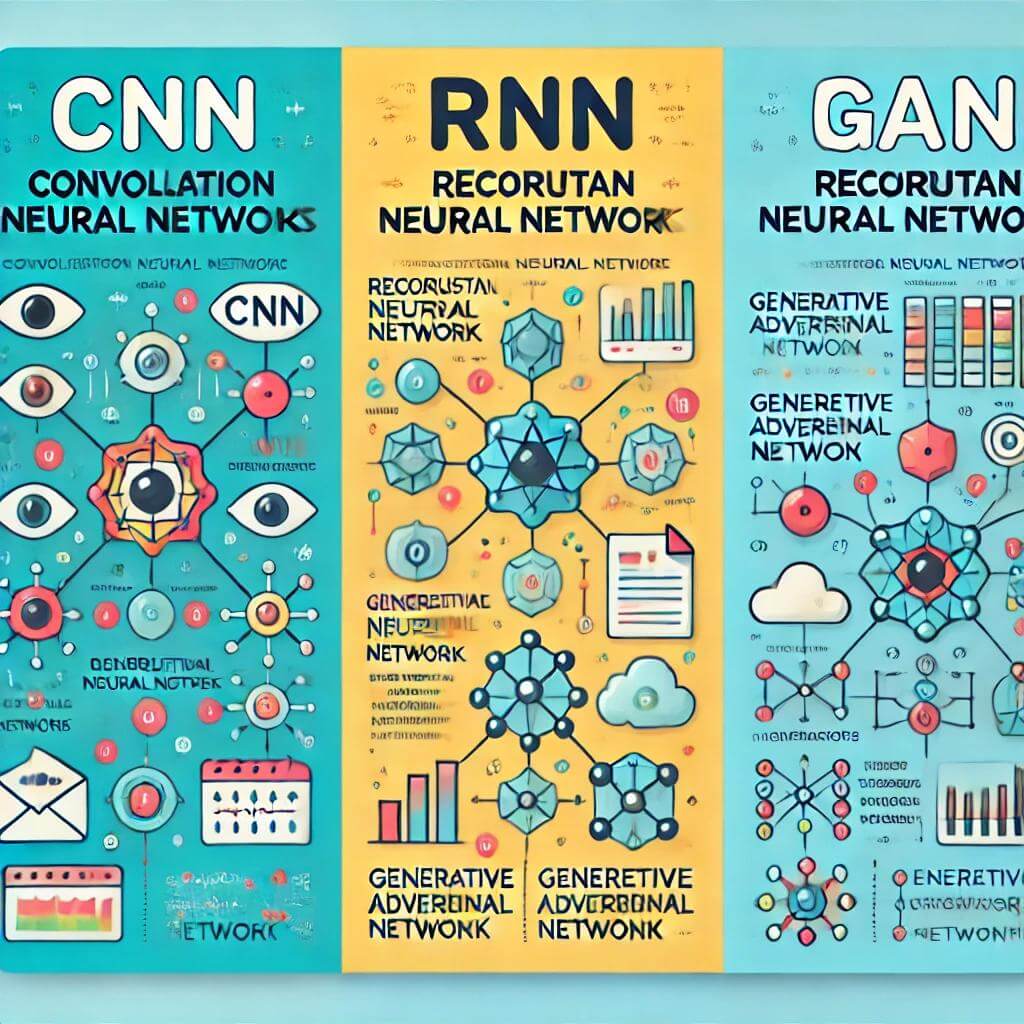

Types of Neural Networks

There are several types of neural networks, each suited to different kinds of tasks.

Feedforward Neural Networks (FNN)

This is the most basic type of neural network. Data flows in one direction, from the input layer through the hidden layers to the output layer, with no loops. These networks are often used for tasks such as classification and regression on structured (tabular) data.

Convolutional Neural Networks (CNN)

These are specialized networks designed for image processing. A CNN slides small learned filters across an image, which lets it detect local patterns such as edges, textures, and shapes. This makes CNNs widely used in tasks like image recognition.
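The core CNN operation, sliding a small filter over an image and taking a weighted sum at each position, can be sketched with NumPy. This assumes a single-channel image, no padding and a stride of 1, and computes cross-correlation, which is what most deep learning libraries call "convolution". The hand-made kernel is illustrative; in a real CNN the kernel values are learned during training.

```python
import numpy as np

def conv2d(image, kernel):
    """Valid 2D cross-correlation: no padding, stride 1."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the image patch currently under the kernel
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# 5x5 toy image: dark left part (0), bright right part (1)
image = np.array([[0, 0, 1, 1, 1]] * 5, dtype=float)

# Hand-made vertical-edge detector: responds where brightness rises from left to right
kernel = np.array([[-1, 1],
                   [-1, 1]], dtype=float)

out = conv2d(image, kernel)
print(out)  # every row is [0. 2. 0. 0.]: the response peaks at the dark-to-bright edge
```

The output is large only where the image changes from dark to bright, which is how early CNN layers pick out edges.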

Recurrent Neural Networks (RNN)

RNNs are designed for sequential data, such as time series or natural language. They maintain a hidden state that is updated at every step, so information from earlier inputs can influence later outputs. This makes them useful for tasks like speech recognition or text generation.
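The "memory" of an RNN is its hidden state. The sketch below assumes the basic (Elman-style) recurrence in which the new state is `tanh(x_t @ W_x + h @ W_h + b)`; the sizes and random weights are illustrative, and practical models usually use variants such as LSTM or GRU cells.

```python
import numpy as np

rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4  # illustrative sizes

# The same parameters are reused at every time step
W_x = rng.normal(scale=0.5, size=(input_size, hidden_size))   # input -> hidden
W_h = rng.normal(scale=0.5, size=(hidden_size, hidden_size))  # hidden -> hidden (the memory path)
b = np.zeros(hidden_size)

def run_rnn(sequence):
    """Return the final hidden state after reading the sequence one step at a time."""
    h = np.zeros(hidden_size)                 # empty memory at the start
    for x_t in sequence:
        h = np.tanh(x_t @ W_x + h @ W_h + b)  # mix the current input with the previous state
    return h

seq = rng.normal(size=(5, input_size))        # a sequence of 5 time steps
h_final = run_rnn(seq)

# Changing only the FIRST input still changes the final state,
# because earlier inputs are carried forward through h
seq_changed = seq.copy()
seq_changed[0] += 1.0
print(h_final)
print(run_rnn(seq_changed))
```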

Key Components of Neural Networks

Several components are crucial for understanding how neural networks function:

Activation Functions

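Activation functions determine each neuron's output by applying a (usually non-linear) function to its weighted input. This non-linearity is what lets a network model complex relationships; without it, any stack of layers would reduce to a single linear transformation. Some common activation functions include: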

- Sigmoid: Maps input values to the range between 0 and 1, which is why it is commonly used in the output layer for binary classification.

- ReLU (Rectified Linear Unit): Outputs max(0, x). It is the most commonly used activation function in hidden layers, especially in deep networks.

- Softmax: Converts a vector of outputs into probabilities that sum to 1, for multi-class classification tasks.

| Activation Function | Purpose |
|---|---|
| Sigmoid | Output layer for binary classification |
| ReLU | Hidden layers; does not saturate for positive inputs |
| Softmax | Output layer for multi-class classification (converts outputs to probabilities) |
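The three activation functions above are short enough to write directly in NumPy. This is an illustrative sketch; the softmax version assumes a single 1-D vector of scores and uses the standard max-subtraction trick for numerical stability.

```python
import numpy as np

def sigmoid(z):
    # Maps any real number into the range (0, 1)
    return 1.0 / (1.0 + np.exp(-z))

def relu(z):
    # Keeps positive values and replaces negatives with 0: max(0, z)
    return np.maximum(0.0, z)

def softmax(z):
    # Subtracting the max avoids overflow in exp; the result is unchanged
    # because softmax gives the same output if every score is shifted equally
    e = np.exp(z - np.max(z))
    return e / e.sum()

z = np.array([-2.0, 0.0, 3.0])
print(sigmoid(z))  # each value strictly between 0 and 1; sigmoid(0) is 0.5
print(relu(z))     # [0. 0. 3.]
print(softmax(z))  # non-negative values that sum to 1; the largest score gets the largest share
```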

Loss Function

The loss function measures how far a model’s predictions are from the actual values. It provides the feedback the network uses to improve itself. Common choices are mean squared error (MSE) for regression tasks and cross-entropy for classification tasks.
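Both loss functions can be sketched in NumPy. The binary cross-entropy below is the standard formula behind the `loss='binary_crossentropy'` choice in the Keras example; the clipping constant `eps` is an illustrative assumption to avoid taking the log of 0, and the sample values are made up.

```python
import numpy as np

def mean_squared_error(y_true, y_pred):
    # Average of the squared differences (regression)
    return np.mean((y_true - y_pred) ** 2)

def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    # Heavily penalizes confident but wrong probabilities (binary classification)
    p = np.clip(p_pred, eps, 1 - eps)
    return -np.mean(y_true * np.log(p) + (1 - y_true) * np.log(1 - p))

# Regression: predictions off by 1 and by 2 give (1 + 4) / 2 = 2.5
print(mean_squared_error(np.array([3.0, 5.0]), np.array([4.0, 3.0])))

# Classification with true label 1: a confident correct prediction costs little,
# a confident wrong one costs a lot
print(binary_cross_entropy(np.array([1.0]), np.array([0.9])))  # about 0.105
print(binary_cross_entropy(np.array([1.0]), np.array([0.1])))  # about 2.303
```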

Real-World Applications of Neural Networks

Neural networks are used in a variety of real-world applications:

- Image Recognition: CNNs are widely used to classify images and even identify objects within images.

- Natural Language Processing (NLP): RNNs and transformers are used to process and generate human language, from translating text to answering questions.

- Medical Diagnosis: Neural networks help in diagnosing diseases by identifying patterns in medical images or patient data.

Advantages and Disadvantages of Neural Networks

Advantages

- Flexibility: Neural networks can be used for a wide variety of tasks, from image recognition to game playing.

- Automatic Feature Extraction: They can automatically discover important features in raw data, especially in tasks like image recognition.

- Accuracy: When trained on large datasets, neural networks can achieve very high accuracy.

Disadvantages

- Data Hungry: Neural networks require a lot of data to perform well, especially deep networks.

- Computationally Expensive: Training large networks can require a significant amount of computational power.

- Lack of Interpretability: Neural networks are often considered black boxes, making it difficult to understand how they arrive at their predictions.

| Advantages | Disadvantages |
|---|---|
| Highly flexible | Requires a lot of data |
| Automatic feature extraction | Computationally expensive |
| High accuracy potential | Lack of interpretability |

Conclusion

Neural networks are a fascinating area of machine learning that can solve complex tasks. Loosely inspired by the way the human brain works, they can process large amounts of data, identify patterns, and make accurate predictions. However, they also come with challenges, such as the need for large datasets and the difficulty of interpreting their inner workings.
